My PC is folding, but it's hit and miss on the work units, so my PPD will fluctuate greatly.

I've edged the HBM2 up a bit to 1100 MHz though. Idk if that'll help speed up what I do get, but every little bit helps, right?

The client should tell you what your theoretical potential PPD is while folding. If increasing your clocks makes a significant difference in that number, then by all means go for it. Changing my clock speeds, voltages, etc. did not seem to make any noticeable difference, even when I lowered them to the lowest the Radeon software would let me.

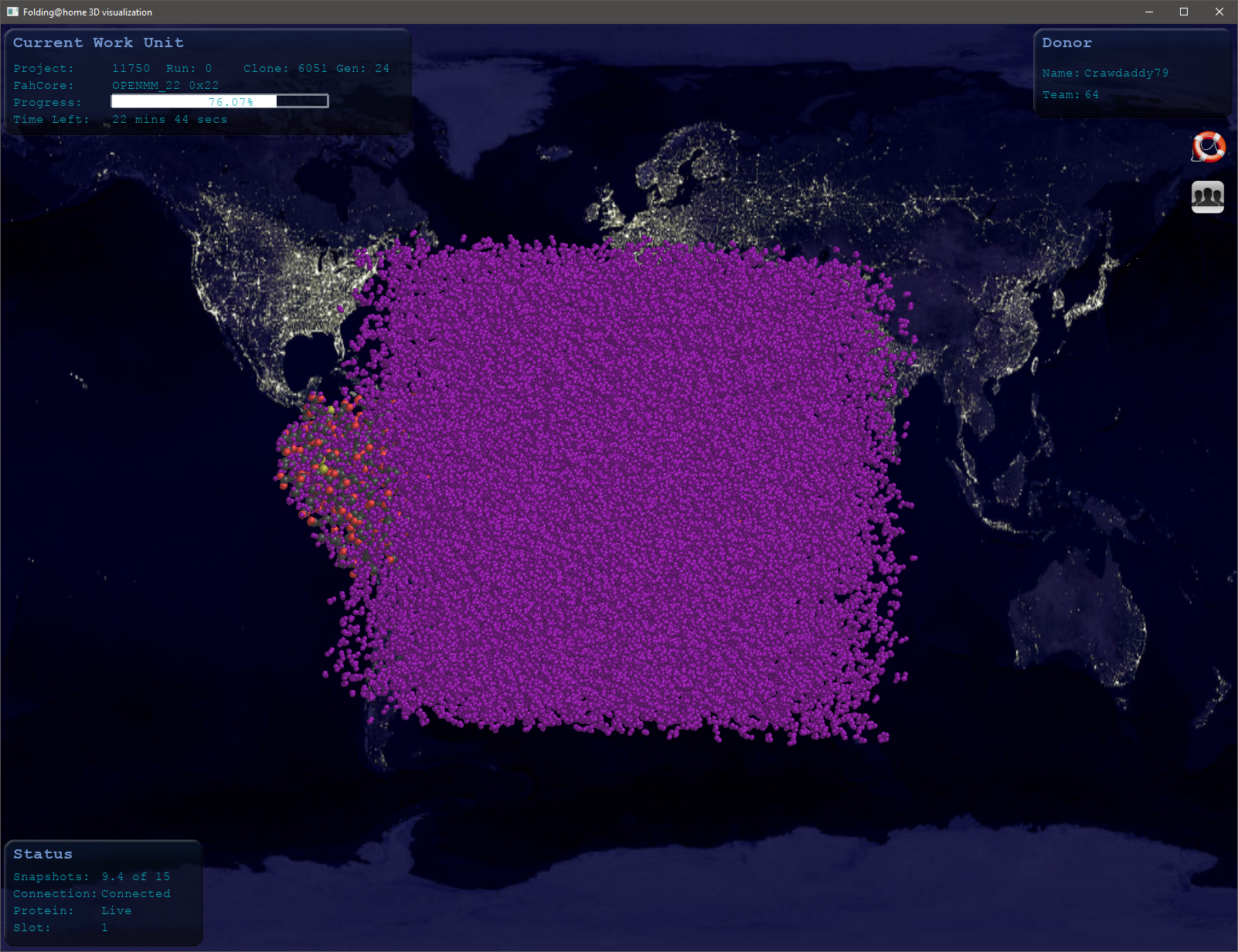

One good thing about the new client: the visual representation works now.

Check out this puppy:

1.7M PPD means nothing when it does this at least once a day. FWIW it typically hits 1.1M. Due to necessarily pausing while doing other things... my real average is much lower.
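For a rough sense of how much pausing eats into that, here's a back-of-the-envelope sketch. The function name and the 16-hour figure are made up for illustration, and it assumes points scale linearly with folding time. It ignores any bonus for returning work units quickly, so the real number could be lower still.

```python
def effective_ppd(rated_ppd: float, active_hours: float) -> float:
    """Scale a rated PPD by the fraction of the day spent actually folding.

    Assumption: points accrue linearly with folding time (no speed bonuses).
    """
    if not 0 <= active_hours <= 24:
        raise ValueError("active_hours must be between 0 and 24")
    return rated_ppd * active_hours / 24.0


# Hypothetical example: the client reports 1.1M PPD, but the GPU
# only folds for 16 of the 24 hours because of pauses.
print(f"{effective_ppd(1_100_000, 16):,.0f}")  # prints 733,333
```

So even a few hours of pausing per day takes a big bite out of whatever the client shows as the instantaneous estimate.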
