Well, if Intel solves their driver problem, then I welcome more competition in discrete graphics.

I agree. Here’s hoping that things get better and that Intel doesn’t pull the plug.


Going by what GN said, the hardware may not be bad, but the drivers are awful and not ready for prime time.

I'm not sure I believe the problem is just drivers. The Arc A380 looks like total **** if we're honest. It came out behind the RX 6400, which is a terrible card that was heavily panned at launch (as was the RX 6500 XT). The only silver lining for the 6400 was that it can be used in a single-slot, low-profile build, because if you can fit a double-slot low-profile card, you're still better off with a 3+-year-old GTX 1650. (Which also means the A380 is not competitive with a three-year-old low-end card.)

Even if drivers are bad, how much of a performance penalty does that account for? Is it 50%? Personally I am skeptical of it being that large, and I don't think the underlying hardware could be very good if the card is losing to the 6400 while using a lot more power.

In some situations it can get close to a 3060, in most it does not.

3DMark isn't my favourite game.

What I'm still confused about is why Intel went ahead with a full production run if the product wasn't looking good. Shouldn't they have been able to tell from early silicon whether the cards were hitting anywhere near their targets? The whole thing just seems bizarre to me.

Or were they blinded by the crypto bubble and figured even a crap card could sell under the circumstances?

Intel Arc A-Series Graphics Ray Tracing Technology Deep Dive

https://www.youtube.com/watch?v=J5eIOv-CrB8

Intel has some very competitive RT hardware.

At Intel Innovation, Intel's CEO confirmed that the A770 will cost 329 USD, which is indeed a very low price for this model. As a reminder, the A770 features a full ACM-G10 GPU with 32 Xe-Cores and either 8GB or 16GB of memory. However, Intel's Limited Edition only comes with 16GB of VRAM, so the $329 price may refer to the 8GB model.

Intel claims that their GPU offers up to 65% better peak performance versus competing products (presumably NVIDIA RTX 3060 series) in ray tracing.

Unfortunately, there is no information on A750 or A580 GPU availability yet, let alone their prices. Ryan Shrout, the face of the Arc Alchemist marketing campaign, confirmed that we will hear more about the A750 GPU later this week. Furthermore, Pat Gelsinger confirmed that A770 LE cards are now shipping to reviewers.

The Limited Edition will be sold by Intel and its e-tail and retail partners. The company also released new pictures of the packaging that we have seen in teasers over the past few months.

CapFrameX

@CapFrameX

Intel Arc available Oct 12 starting at $329. Samples already on their way to reviewers.

when do the reviews come out?

are these DoA?

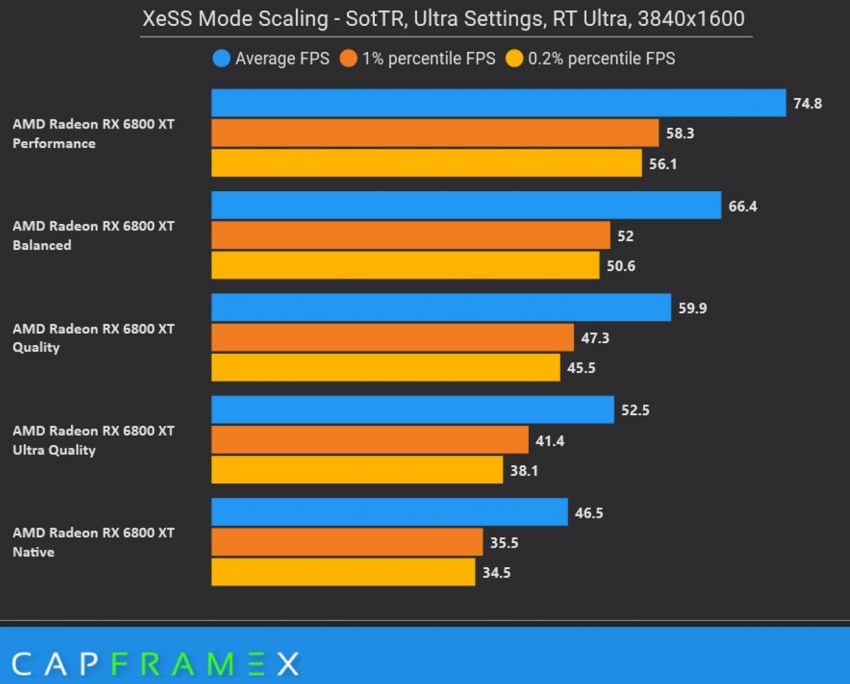

XeSS performance scaling all modes. RX 6800 XT stock. Shadow of the Tomb Raider, Ultra Settings, RT Ultra, 3840x1600
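The chart compares XeSS modes at a 3840x1600 output. The mode names map to an internal render resolution that is then upscaled. The sketch below shows that arithmetic; the per-axis scale factors are an assumption (our understanding of the XeSS 1.0 presets) and are not given in the caption, so treat the resulting resolutions as illustrative.

```python
# Sketch: internal render resolution per XeSS mode for a 3840x1600 output.
# ASSUMPTION: the per-axis scale factors below reflect the XeSS 1.0 presets as we
# understand them; they are not stated in the benchmark caption.
XESS_SCALE = {
    "Ultra Quality": 1.3,
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
}


def render_resolution(out_w: int, out_h: int, scale: float) -> tuple[int, int]:
    """Divide each output axis by the mode's scale factor, rounding to whole pixels."""
    return round(out_w / scale), round(out_h / scale)


for mode, scale in XESS_SCALE.items():
    w, h = render_resolution(3840, 1600, scale)
    share = w * h / (3840 * 1600)  # fraction of output pixels actually shaded
    print(f"{mode:>13}: {w}x{h} ({share:.0%} of output pixels)")
```

Because the factor applies to both axes, the shaded pixel count drops with its square, which is why the higher-performance modes scale frame rates so sharply.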

Intel expanded its Arc "Alchemist" desktop graphics card series with the entry-level Arc A310. This GPU has specs that enable Intel's AIB partners to build low-profile graphics cards that may even be single-slot, or conventionally sized cards with fanless cooling. The A310 is being pushed as a slight upgrade over the iGPU and an alternative to cards such as the AMD Radeon RX 6400. Its target user is someone building a 4K or 8K HTPC, or a workstation/HEDT user whose processor lacks integrated graphics and who wants to drive a couple of high-resolution monitors. There is no reference board design, but we expect it to look similar to the Arc Pro A40 in dimensions (pictured below), except with full-size DP and HDMI in place of those mDP connectors, and a full-height bracket out of the box.

The A310 is carved out of the 6 nm "ACM-G11" silicon by enabling 6 out of 8 Xe Cores (that's 96 out of 128 EUs, or 768 out of 1,024 unified shaders). You also get 96 XMX units that accelerate AI, and 6 ray tracing units. The GPU runs at 2.00 GHz, compared to 2.10 GHz on the A380. The memory sub-system has been narrowed by a third: you get 4 GB of 15.5 Gbps GDDR6 memory across a 64-bit wide memory interface. In comparison, the A380 has 6 GB of memory across a 96-bit memory bus. The card features a PCI-Express 4.0 x8 host interface, and with its typical power expected to be well under the 75 W mark, most custom cards could lack any power connectors.
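The unit counts and the "narrowed by a third" claim follow from simple arithmetic. The sketch below reproduces them; the 16 EUs per Xe Core and 8 shaders per EU are inferred from the quoted 96/128 EU and 768/1,024 shader figures, and the A380's 15.5 Gbps data rate is an assumption, since the article only gives its bus width.

```python
# Sketch: derive the Arc A310 figures quoted above and compare memory bandwidth
# with the A380.
# ASSUMPTIONS: 16 EUs per Xe Core and 8 shaders per EU (implied by the quoted
# EU and shader counts); the A380 is assumed to use the same 15.5 Gbps GDDR6.

EUS_PER_XE_CORE = 16
SHADERS_PER_EU = 8


def shader_config(xe_cores: int) -> tuple[int, int]:
    """Return (execution units, unified shaders) for a given Xe Core count."""
    eus = xe_cores * EUS_PER_XE_CORE
    return eus, eus * SHADERS_PER_EU


def bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s: per-pin data rate x bus width / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


a310_eus, a310_shaders = shader_config(6)   # A310: 6 of 8 Xe Cores enabled
full_eus, full_shaders = shader_config(8)   # full ACM-G11
a310_bw = bandwidth_gb_s(15.5, 64)
a380_bw = bandwidth_gb_s(15.5, 96)

print(f"A310: {a310_eus}/{full_eus} EUs, {a310_shaders}/{full_shaders} shaders")
print(f"A310: {a310_bw:.0f} GB/s vs A380: {a380_bw:.0f} GB/s "
      f"({1 - a310_bw / a380_bw:.0%} less)")
```

At an equal data rate, bandwidth scales linearly with bus width, so going from 96 to 64 bits removes exactly one third of it.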

XeSS is now available in Shadow of the Tomb Raider, and it has already been published on GitHub. This surprising news comes on the same day Intel announced the launch date for its Arc A770 GPU. That means XeSS actually became available before Intel has even shipped its high-end desktop Arc GPUs.

Shadow of the Tomb Raider is the first known game to support XeSS. The patch was released today and has been confirmed through Steam release notes:

Intel XeSS supports not only Arc GPUs, but also AMD Radeon and NVIDIA GeForce GPUs. The technology has already been tested and confirmed to work by CapFrameX with a Radeon RX 6800 XT GPU in SoTR:

Intel's highest-end graphics card lineup is approaching its retail launch, and that means we're getting more answers to crucial market questions of prices, launch dates, performance, and availability. Today, Intel answered more of those A700-series GPU questions, and they're paired with claims that every card in the Arc A700 series punches back at Nvidia's 18-month-old RTX 3060.

After announcing a $329 price for its A770 GPU earlier this week, Intel clarified that the company would launch three A700 series products on October 12: the aforementioned Arc A770 for $329, which sports 8GB of GDDR6 memory; an Arc A770 Limited Edition for $349, which jumps up to 16GB of GDDR6 with slightly higher memory bandwidth and otherwise identical specs; and the slightly weaker A750 Limited Edition for $289.

If you missed the memo on that sub-$300 GPU when it was previously announced, the A750 LE is essentially a binned version of the A770's chip with 87.5 percent of the shading units and ray tracing (RT) units turned on, along with an ever-so-slightly lower boost clock (2.05 GHz, compared to 2.1 GHz on both A770 models).
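To put "87.5 percent" and the clock difference into concrete numbers, here is a rough sketch. It takes the 32 Xe Cores of the full ACM-G10 from earlier in the thread; the 128 shaders and one RT unit per Xe Core are assumptions, not figures from the article. The throughput ratio is a naive units-times-clock estimate, not a performance prediction.

```python
# Sketch: what "87.5 percent of the shading and RT units" means for the A750 LE.
# ASSUMPTIONS: 128 shaders and 1 RT unit per Xe Core; neither figure appears in
# the quoted article. The 32 Xe Cores of the full ACM-G10 come from the thread.

FULL_XE_CORES = 32
SHADERS_PER_XE_CORE = 128
RT_UNITS_PER_XE_CORE = 1
A770_BOOST_GHZ = 2.10
A750_BOOST_GHZ = 2.05

a750_cores = round(FULL_XE_CORES * 0.875)
a750_shaders = a750_cores * SHADERS_PER_XE_CORE
a770_shaders = FULL_XE_CORES * SHADERS_PER_XE_CORE
a750_rt_units = a750_cores * RT_UNITS_PER_XE_CORE

# Naive peak shader throughput ratio (enabled shaders x clock). This ignores
# memory bandwidth, drivers, and everything else that decides real frame rates.
throughput_ratio = (a750_shaders * A750_BOOST_GHZ) / (a770_shaders * A770_BOOST_GHZ)

print(f"A750 LE: {a750_cores} Xe Cores, {a750_shaders} shaders, {a750_rt_units} RT units")
print(f"Naive shader throughput vs A770: {throughput_ratio:.1%}")
```

On paper that puts the A750 LE a bit under 15% behind the A770, which is the gap the $289 vs $329 pricing has to justify.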

Player 3 has entered the game! While the Iris Xe Max (DG1) was technically Intel's first discrete GPU in decades, the new Arc 7 series marks Intel's first attempt ever at the performance graphics segment, a hotly contested one where gamers playing at 1080p or 1440p pick up a graphics card to max out the eye candy in AAA games or to get extreme frame rates for competitive gaming. The new Arc "Alchemist" 7-series promises just this, and with the metaverse taking shape, Intel is gripped by the fear of missing out on a potentially massive hardware market.

We have with us the Intel Arc A750 and the Arc A770 Limited Edition. Both cards meet the performance-segment goals for a graphics card and come with the full DirectX 12 Ultimate feature set, including hardware-accelerated ray tracing, plus XeSS, Intel's answer to DLSS and FSR. We'll tell you a lot more about them in our full review of the two cards. For now, we've been allowed to show you the cards themselves!