It shouldn't matter where the NVMe drive is. On the Maximus XI Formula, with a single GPU in the top slot, that slot will run at PCIe 3.0 x16 regardless of which M.2 slots are populated.

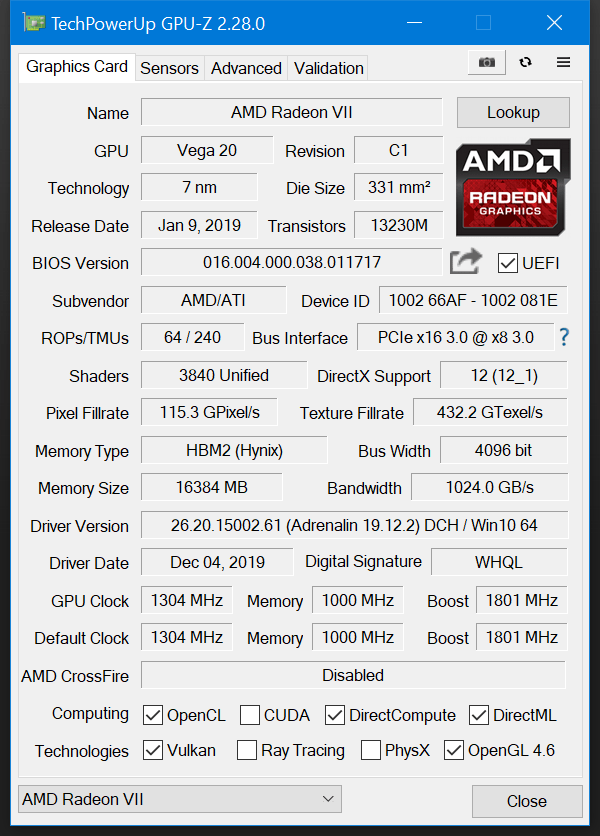

I'm not sure why the Radeon is acting up. It sounds like the 1080 issue is tied to your monitor being weird, which is fine. However, it doesn't make much sense that the Radeon refuses to run above PCIe 3.0 x8. Have you tried running DDU (Display Driver Uninstaller) to clean out any remnants of prior drivers? I'm not sure whether you had an NVIDIA card before the AMD one, but leftover drivers could be causing the issue, I suppose.